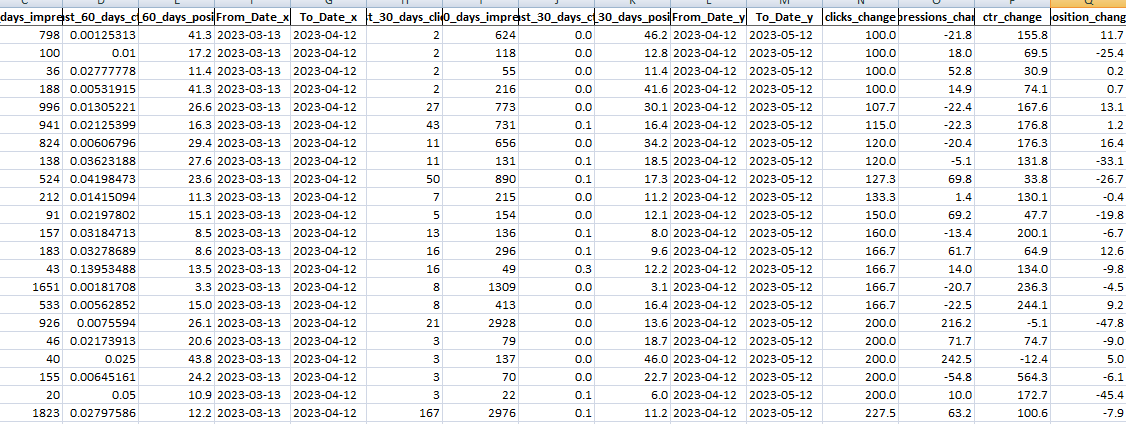

In this tutorial, you will learn how to fetch page link metrics like page rank, impressions, clicks and CTR from Google Search Console and download it to an xlsx Excel file. Using the downloaded xlsx report we can easily compare the previous data and compare it with the latest to see which pages are showing good growth in recent times and which ones losing their clicks and rank. So that you can have a look at them to improve your rankings.

We will guide you on how to create a Python script that generates a Google Search Console report and exports it to an Excel file. This report will show search analytics data over two different periods: the last 30 and 60 days or any time you want to compare with any specified dates.

The important thing is to learn how to connect with Google Search Console to fetch data after the proper authentication method by running a Python script in your CMD terminal. This script is going to provide the most important metrics which are generally very difficult to see in Search Console Tool itself.

Let’s get started and learn how to download a detailed Google Search Console report in your load with all matrics:

How to Download Data from Google Search Console and See Most Growing and Losing Pages of your site?

Follow these quick steps to create a Python script that will authenticate and download Google Search Console data in the desired format and download it in XLSX file format:

Prerequisites

To authenticate your Python script with Google Search API, create XLSX file and other such important operations we need to install some required Python libraries, make sure you have the following installed:

- Python 3.6 or later

- Google API Python Client

- Google Auth Library

- Pandas

- Openpyxl

You can install these packages using pip:

pip install --upgrade google-api-python-client google-auth-httplib2 google-auth-oauthlib pandas openpyxl

Step 1: Google Search Console API Setup

To connect to the Google Search Console API, you need to create credentials in the Google API Console. This involves creating a new project, enabling the Search Console API, and creating credentials.

- Visit the Google API Console

- Create a new project

- Enable the Search Console API for your project

- Create credentials (OAuth client ID) for a Desktop application

How to download the credentials JSON file?

In our Python script, we need to pass the credentials via JSON file. This JSON credential file can be easily downloaded from your Google Cloud Console project. To get a client_secrets.json file, you need to create a new project in the Google Cloud Console and enable the Search Console API for it. Here are the detailed steps:

- Go to the Google Cloud Console at https://console.cloud.google.com/.

- If you haven’t created a project yet, you can create one by clicking on

Select a projectat the top of the page, then NEW PROJECT. If you already have a project, you can use that. - Once you have a project, go to the Dashboard (on the left sidebar).

- Click on ENABLE APIS AND SERVICES (it’s usually at the top of the page).

- Search for “Search Console API” and select it, then click

ENABLE. - Once the API is enabled, go to the

Credentialssection (on the left sidebar under APIs & Services). - Click on

CREATE CREDENTIALSand selectOAuth client ID. - If you haven’t configured the OAuth consent screen yet, you’ll need to do so. You can just fill in the required fields, set the

User TypetoExternal, and save. - In the

Create OAuth client IDscreen, selectDesktop appfor theApplication type, then clickCREATE. - You should now see your

client IDandclient secret. ClickOK. - Now, on the

Credentialspage, you should see your newOAuth 2.0 Client ID. On the right side of the row, click the download icon to download theclient_secrets.jsonfile.

Now you have the client_secrets.json file. You can use it in your Python script to authenticate with the Search Console API. Save the credentials file in your project directory and note down the filename.

Step 2: Importing Required Libraries

At the beginning of our Python script, we will start by importing the necessary libraries.

from googleapiclient.discovery import build

from google_auth_oauthlib.flow import InstalledAppFlow

from datetime import datetime, timedelta

import pandas as pd

from openpyxl import Workbook

Step 3: Authentication and Service Creation

Next, we’ll authenticate using the credentials file and create a service to interact with the Search Console API.

In step 1, we have already downloaded the required JSON file for example client_secrets.json. Replace client_secrets.json with the filename of your credentials file.

flow = InstalledAppFlow.from_client_secrets_file('client_secrets.json', ['https://www.googleapis.com/auth/webmasters.readonly'])

credentials = flow.run_local_server(port=0) # Use run_local_server method here

service = build('webmasters', 'v3', credentials=credentials)

Step 4: Defining Helper Functions

In our Python script, we will define some helper functions that will help us format the data we will receive from Google Search Console. Following are several helper functions to get the search console data, calculate percentage changes, and generate an Excel file.

Get the Date of N Days Ago

def get_date_n_days_ago(n):

return (datetime.now() - timedelta(days=n)).strftime('%Y-%m-%d')

Get Search Console Data

def get_search_console_data(service, site_url, start_date, end_date, label):

request = service.searchanalytics().query(

siteUrl=site_url,

body={

'startDate': start_date,

'endDate': end_date,

'dimensions': ['page']

}

)

response = request.execute()

df = pd.DataFrame(response['rows'])

df['keys'] = df['keys'].apply(lambda x: x[0]) # Convert lists in 'keys' column to strings

df.columns = [label + '_' + col if col != 'keys' else 'keys' for col in df.columns]

return df

Calculate Percentage Change

def calculate_percentage_change(data):

data['clicks_change'] = ((data['last_30_days_clicks'] - data['last_60_days_clicks']) / data['last_60_days_clicks']) * 100

data['impressions_change'] = ((data['last_30_days_impressions'] - data['last_60_days_impressions']) / data['last_60_days_impressions']) * 100

data['ctr_change'] = ((data['last_30_days'] - data['last_60_days']) / data['last_60_days_ctr']) * 100

data['position_change'] = ((data['last_30_days_position'] - data['last_60_days_position']) / data['last_60_days_position']) * 100

return data

Generate an Excel File

def generate_excel_file(data_old, data_new, filename):

merged_data = pd.merge(data_old, data_new, on='keys')

merged_data = calculate_percentage_change(merged_data)

print(merged_data.columns)

merged_data.to_excel(filename, index=False)

Step 5: Fetching Data and Generating the Excel File

Finally, we’ll fetch the search console data for the last 30 and 60 days and generate an Excel file with the report.

Replace sc-domain:yourwebsite.com with the property that you want to fetch the data from. The property should be added to your Google Search Console account.

# Your site URL

site_url = 'sc-domain:yourwebsite.com'

# Get data for the last 60 and 30 days

data_last_60_days = get_search_console_data(service, site_url, get_date_n_days_ago(60), get_date_n_days_ago(30), 'last_60_days')

data_last_60_days['From_Date'] = get_date_n_days_ago(60)

data_last_60_days['To_Date'] = get_date_n_days_ago(30)

data_last_30_days = get_search_console_data(service, site_url, get_date_n_days_ago(30), get_date_n_days_ago(0), 'last_30_days')

data_last_30_days['From_Date'] = get_date_n_days_ago(30)

data_last_30_days['To_Date'] = get_date_n_days_ago(0)

# Generate the Excel file

generate_excel_file(data_last_60_days, data_last_30_days, 'search_console_data.xlsx')

After adding all the required sections we discussed above, the complete Python script will look like this:

from googleapiclient.discovery import build

from google_auth_oauthlib.flow import InstalledAppFlow

from datetime import datetime, timedelta

import pandas as pd

from openpyxl import Workbook

# Get the date of n days ago

def get_date_n_days_ago(n):

return (datetime.now() - timedelta(days=n)).strftime('%Y-%m-%d')

# Get the Search Console data of a specific site and date range

def get_search_console_data(service, site_url, start_date, end_date, label):

request = service.searchanalytics().query(

siteUrl=site_url,

body={

'startDate': start_date,

'endDate': end_date,

'dimensions': ['page']

}

)

response = request.execute()

df = pd.DataFrame(response['rows'])

df['keys'] = df['keys'].apply(lambda x: x[0]) # Convert lists in 'keys' column to strings

df.columns = [label + '_' + col if col != 'keys' else 'keys' for col in df.columns]

return df

def calculate_percentage_change(data):

data['clicks_change'] = ((data['last_30_days_clicks'] - data['last_60_days_clicks']) / data['last_60_days_clicks']) * 100

data['impressions_change'] = ((data['last_30_days_impressions'] - data['last_60_days_impressions']) / data['last_60_days_impressions']) * 100

data['ctr_change'] = ((data['last_30_days'] - data['last_60_days']) / data['last_60_days_ctr']) * 100

data['position_change'] = ((data['last_30_days_position'] - data['last_60_days_position']) / data['last_60_days_position']) * 100

return data

# Generate Excel file with two datasets

def generate_excel_file(data_old, data_new, filename):

merged_data = pd.merge(data_old, data_new, on='keys')

merged_data = calculate_percentage_change(merged_data)

merged_data.to_excel(filename, index=False)

# Authenticate and create a service

flow = InstalledAppFlow.from_client_secrets_file('client_secret.json', ['https://www.googleapis.com/auth/webmasters.readonly'])

credentials = flow.run_local_server(port=0) # Use run_local_server method here

service = build('webmasters', 'v3', credentials=credentials)

# Your site URL

site_url = 'sc-domain:your-site-domain.com'

# Get data for the last 60 and 30 days

data_last_60_days = get_search_console_data(service, site_url, get_date_n_days_ago(60), get_date_n_days_ago(30), 'last_60_days')

data_last_60_days['From_Date'] = get_date_n_days_ago(60)

data_last_60_days['To_Date'] = get_date_n_days_ago(30)

data_last_30_days = get_search_console_data(service, site_url, get_date_n_days_ago(30), get_date_n_days_ago(0), 'last_30_days')

data_last_30_days['From_Date'] = get_date_n_days_ago(30)

data_last_30_days['To_Date'] = get_date_n_days_ago(0)

# Generate the Excel file

generate_excel_file(data_last_60_days, data_last_30_days, 'search_console_data.xlsx')

Step 6: Run Python Script and Download the XLSX File

You can execute the Python script for example if it is having name search_google.py then execute the following:

python search_google.pyAfter executing the above script, you will be redirected to the Google Authentication page, where you need to select the Google account in which you have the above domain registered in the Google Search console. After auth is complete you can close the browser window to complete the download in the same root directory.

An Excel file named search_console_data.xlsx will be created in the project directory with the search console data of the last 30 and 60 days.

And that’s it! You now have a Python script that fetches data from the Google Search Console API and generates an Excel report. You can run this script regularly to monitor the search performance of your website.

Conclusion

By using the Google Search Console’s data we can have a deeper understanding of our website’s presence in Google’s search results. It grants us access to insights including clicks, impressions, CTR, and position, using which we can easily optimize the performance to the next level.

In this tutorial, we have gone through the steps required to access this data programmatically using Python, Google’s Search Console API, and OAuth 2.0 for authentication. The script is designed in a way that retrieves the data for the last 30 and 60 days and calculates the percentage change for the key metrics. Then the final output is downloaded as an Excel file that consolidates all this information.

This automates this process and saves a lot of time and effort in manually extracting and analyzing the data. You can extend this script to suit your specific needs, such as changing the date ranges or the metrics acording to your needs. Furthermore, you could also schedule this script to run periodically to always keep your SEO data up-to-date.

Make sure to replace 'your-site-domain.com' with your actual site domain in the script, and ensure you have the necessary permissions to access the Search Console data.

Hope this will be helpful in analysing your site’s matrics in a better way.